Pilot Study

We conducted a pilot study at the Intelligent Robotics and Communication Laboratories at ATR, Japan, to evaluate the expression recognition system in unconstrained environments. Subjects interacted freely with RoboVie, a communication robot developed at ATR and the University of Osaka (Ishiguro, 2001).

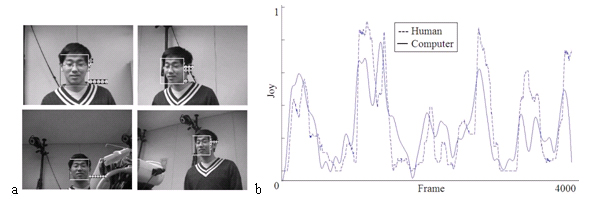

Figure 3. a. Facial expression is measured during interaction with the Robovie robot from the continuous output of four video cameras. b. Mean human ratings compared to automated system outputs for 'joy' (one subject).

To improve system performance, we recorded video simultaneously from four cameras. Fourteen paid participants recruited from the University of Osaka were invited to interact with RoboVie for a 5-minute period. Faces were automatically detected and facial expressions classified independently on each of the four cameras. This resulted in a 28-dimensional vector per video frame (7 emotion scores x 4 cameras). The outputs of the four cameras were then combined using a standard probabilistic fusion model.

To assess the validity of the system, four naive human observers were presented with the videos of each subject at 1/3 speed. The observers indicated the amount of happiness shown by the subject in each video frame by turning a dial, a technique commonly used in marketing research. Figure 3 compares human judgments with the automated system. The frame-by-frame correlation between human judges, averaged across subjects and judge pairs, was 0.54. The average correlation between the four judges and the automated system was 0.56, which does not differ significantly from the human/human agreement (t(13) = 0.15, p<0.875). Figure 3b shows, frame by frame, the average scores given by the four human judges for a particular subject and the scores predicted by the automatic system. We are presently evaluating the four-camera version of the system as a potential new tool for research in behavioral and clinical studies.
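
The fusion and validation steps can be illustrated with a minimal sketch. The text does not specify which fusion model was used, so the code below assumes a simple product rule (per-camera scores treated as class probabilities, cameras treated as conditionally independent, uniform class prior); this is an illustrative stand-in and not necessarily the model used in the study. The function names `fuse_cameras` and `pearson` are hypothetical. The second function computes a frame-by-frame Pearson correlation of the kind reported between the human dial ratings and the system output.

```python
import math


def fuse_cameras(per_camera_probs):
    """Fuse per-camera class probabilities for one video frame.

    ASSUMPTION: product-rule fusion (conditionally independent cameras,
    uniform prior). The paper only says "a standard probabilistic
    fusion model", so this is one plausible choice, not the confirmed one.

    per_camera_probs: list of probability vectors, one per camera
    (e.g. 4 cameras x 7 emotion classes).
    """
    n_classes = len(per_camera_probs[0])
    # Product rule in log space; floor at 1e-12 to avoid log(0).
    log_scores = [
        sum(math.log(max(probs[k], 1e-12)) for probs in per_camera_probs)
        for k in range(n_classes)
    ]
    # Normalize with the log-sum-exp trick for numerical stability.
    peak = max(log_scores)
    unnormalized = [math.exp(s - peak) for s in log_scores]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]


def pearson(x, y):
    """Pearson correlation between two equal-length per-frame series."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)
```

In use, `fuse_cameras` would be called once per frame on the four 7-dimensional score vectors, and `pearson` would then compare the fused 'joy' score across frames with the mean dial rating of the human judges for the same frames.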
