Past News

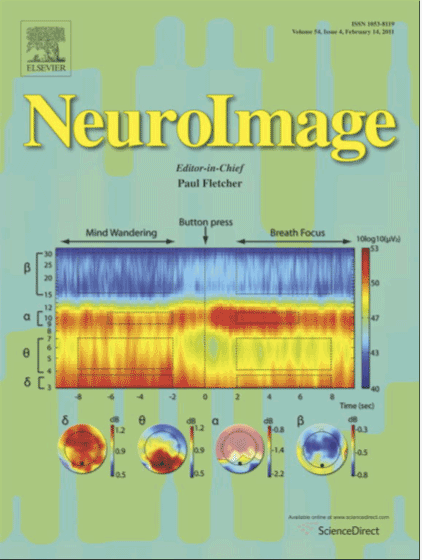

4/1/19 – Supercomputers Aid Our Understanding of Complex Brain Waves

Features Drs. Scott Makeig and Arnaud Delorme!

3/5/19 – UC San Diego named a 2019 NEA Research Lab

Drs. John Iversen, Tim Brown, and Terry Jernigan, in partnership with San Diego Children's Choir and Vista Unified School District, will study the potential effects of musical interventions on early childhood development.

10/25/18 – Dr. Terrence Sejnowski is interviewed for a podcast

"How to Start a Deep Learning Revolution" (Brain Inspired, episode #15)

10/16/18 – A pioneering scientist explains ‘deep learning’

Dr. Terry Sejnowski discusses his new book, The Deep Learning Revolution. (The Verge)

9/19/18 – Physicists Train Robotic Gliders to Soar like Birds

Researchers used reinforcement learning to train gliders to autonomously navigate atmospheric thermals. The study, a collaboration between UC San Diego, the Salk Institute and Abdus Salam International Center for Theoretical Physics in Italy, was published in the Sept. 19 issue of Nature. Dr. Terry Sejnowski, a co-author of the study, explains, "This paper is an important step toward artificial intelligence." (UC San Diego)

September 2018 – Sound Health: Music and the Mind

TDLC investigator Dr. John Iversen spoke at Music and the Mind, held at The Kennedy Center on September 7-8, 2018. The program brought together artists and neuroscientists to explore connections between music, rhythm, and brain development. Read about the event on The Kennedy Center website, or download the flyer here!

7/18/18 – New Summer Internship Underway at UC San Diego (10 News)

Dr. Leanne Chukoskie founded a program that has hired dozens of paid interns diagnosed with autism. The interns develop computer apps that can help others with autism, as well as anyone trying to improve motor skills and focus.

6/22/18 – Can science-based video games help kids with autism?

The article discusses work at UC San Diego's Research on Autism and Development (RAD) Laboratory with its Director, Jeanne Townsend, and Associate Director, Leanne Chukoskie. (Originally published in Spectrum.)

3/8/18 – Scientists Construct Google-Earth-like Atlas of the Human Brain

Ruey-Song Huang of UC San Diego and Martin Sereno of San Diego State University have produced a new kind of atlas of the human brain that, they hope, can eventually be refined and improved to provide more detailed information about the organization and function of the human brain.

2/8/18 – New '4-D Goggles' Allow Wearers to be 'Touched' by Approaching Objects

The goggles have been developed by Ruey-Song Huang of UC San Diego and Martin Sereno of San Diego State University.

1/2/18 – Congratulations, Dr. Leanne Chukoskie, on being featured in San Diego Magazine!

Article title: "15 Coolest Jobs in San Diego and How to Get Them"

12/2017 – Congratulations, Dr. Terry Sejnowski, on being elected a National Academy of Inventors (NAI) Fellow!

12/12/17 – What Learning Looks Like: Creating A Well-Tuned Orchestra In Your Head

Dr. John Iversen discusses the neuroscience of music and its effect on brain development in school-age children!

07/11/17 – UC San Diego Part of International Team to Develop Wireless Implantable Microdevices for the Brain

Institute for Neural Computation (INC) Co-Directors Drs. Terry Sejnowski and Gert Cauwenberghs are part of the international collaboration led by Brown University to develop a wireless neural prosthetic system that could record and stimulate neural activity with unprecedented detail and precision. Researchers envision that the wireless neural prosthetics could lead to new medical therapies for people who have lost sensory function due to injury or illness.

05/2017 – Drs. Terrence Sejnowski and Barbara Oakley have launched a new Massive Open Online Course (MOOC)

Their MOOC for Coursera, "Learning How to Learn," has already enrolled 1.8 million learners. As a follow-up, they launched a new course in April 2017 called "Mindshift: Break Through Obstacles to Learning and Discover Your Hidden Potential." They are also writing a "Learning How to Learn" book for children ages 10-13, to be available in April 2018.

05/1/17 – Researchers Receive $7.5 Million Grant to Study Memory Capacity and Energy Efficiency in the Brain

Dr. Terrence Sejnowski is part of the team, led by Dr. Padmini Rangamani.

04/20/17 – Sensor-Equipped Glove Could Help Doctors Take Guesswork Out of Measuring Spasticity

INC's Leanne Chukoskie is part of an interdisciplinary team of researchers at UCSD and Rady Children’s Hospital that has developed a sensor-equipped glove that could help doctors measure stiffness during physical exams. (Photo: Erik Jepsen/UC San Diego)

03/01/2017 – John Iversen Explores our Perception of Musical Rhythm

An article in The Scientist features TDLC's Dr. John Iversen. It profiles his work on the neural mechanisms of rhythm perception, where he has demonstrated the active role of the brain in shaping how a listener perceives a rhythm. Iversen's other TDLC work, with TDLC researcher Terry Jernigan, examines the impact of music on children's brain development. He was also awarded an NSF Science of Learning grant to explore the next generation of EEG data collection in the classroom (with TDLC researchers Tzyy-Ping Jung and Alex Khalil).

He was also interviewed on Voice of La Jolla.

11/19/16 – Learning to Move and Moving to Learn

Several INC scientists have been awarded an NSF grant to study how physical movement can be used to identify children with learning disabilities. (IUB Newsroom).

09/19/16 – A Global Unified Vision For Neuroscience

TDLC's Drs. Terry Sejnowski and Andrea Chiba participated in an exciting Global Brain Initiative Meeting at Rockefeller University in New York on Sept. 19, 2016.

09/15/16 – 'Princess Leia' brainwaves help sleeping brain store memories

INC Co-Director Dr. Terrence Sejnowski, with fellow Salk scientists including Dr. Lyle Muller, co-authored the study. (Salk Institute)

09/10/16 – Team Goblin wins first place in the inaugural San Diego Brain Hackathon!

Drs. John Iversen and Alex Khalil, along with Joseph Heng (a visiting master's student from Switzerland), made up "Team Goblin."

2016 – Qusp [now Intheon] Recognized on the TransTech 200 List

The TransTech 200 is the annual list of the key innovators who are driving technology for mental and emotional wellbeing forward. Qusp (now known as Intheon) was founded by former INC member Tim Mullen and is listed on the 2017 TransTech 200.

8/29/16 – Todd Hylton joins UC San Diego Contextual Robotics Institute

Todd Hylton, a well-known San Diego scientist and entrepreneur, joins the UC San Diego Contextual Robotics Institute. He is also an affiliate faculty member at INC.

08/25/16 – Neuroscientists stand up for basic cell biology research

Dr. Terry Sejnowski co-authored the article published in the journal Science.

01/19/2016 – Listening to Waves - A Program to Learn Science Through Music

The Listening to Waves program was developed from research that Victor Minces and Alexander Khalil conducted at the Temporal Dynamics of Learning Center (TDLC). The goal of the program is for participants to "learn the hidden and ubiquitous world of waves through the making and analysis of musical sound."

10/21/2015 – UC MERCI Highlight - Mozart and the Mind - John Iversen

Great minds in music cognition research gathered for a stimulating colloquium organized by UC MERCI (Dr. Scott Makeig, Director) in collaboration with Mozart & the Mind.

01/19/2016 – Salk study expands brain memory capacity estimate

Storage capacity of synapses much greater than previously believed

The study, published in the journal eLife, also sheds light on how the brain manages massive amounts of data with very little energy while avoiding conceptual traps that can stymie machine learning algorithms, said Thomas Bartol, the study's first author. Noted brain researcher Terry Sejnowski was senior author. The study can be found at j.mp/synapti.

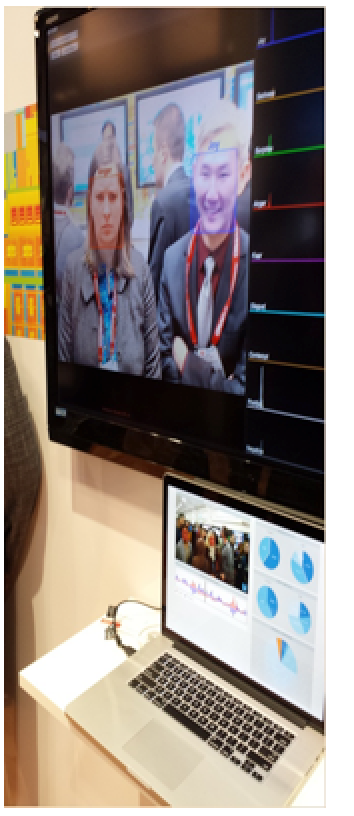

01/15/2016 – Apple acquires artificial-intelligence startup Emotient.

Apple purchases Emotient, a start-up founded in 2012 by six scientists from UC San Diego (INC / MPLab).

Apple's purchase of Emotient fuels artificial intelligence boom in Silicon Valley

The arms race in Silicon Valley is on for artificial intelligence.

Facebook is working on a virtual personal assistant that can read people's faces and decide whether or not to let them in your home. Google is investing in the technology to power self-driving cars, identify people on its photo service and build a better messaging app.

Now Apple is adding to its artificial intelligence arsenal. The iPhone maker purchased Emotient, a San Diego maker of facial expression recognition software that can detect emotions to assist advertisers, retailers, doctors and many other professions.

01/12/2016 – Brain monitoring takes a leap out of the lab

(Drs. Scott Makeig and Tzyy-Ping Jung of SCCN are co-authors of the study, as are TDLC's Tim Mullen and INC Co-Director Gert Cauwenberghs.)

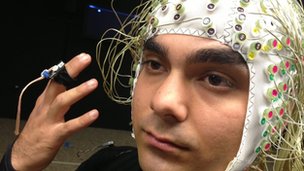

Bioengineers and cognitive scientists have developed the first portable, 64-channel wearable brain activity monitoring system that's comparable to state-of-the-art equipment found in research laboratories.

The system is a better fit for real-world applications because it is equipped with dry EEG sensors that are easier to apply than wet sensors, while still providing high-density brain activity data. The system comprises a 64-channel dry-electrode wearable EEG headset and a sophisticated software suite for data interpretation and analysis. It has a wide range of applications, from research to neurofeedback to clinical diagnostics.

The researchers' goal is to get EEG out of the laboratory setting, where it is currently confined by wet EEG methods. In the future, scientists envision a world where neuroimaging systems work with mobile sensors and smart phones to track brain states throughout the day and augment the brain's capabilities.

01/14/2016 – Apple acquires artificial-intelligence startup Emotient.

An iPhone that can 'feel' your pain? Apple's latest acquisition could make it happen.

The company has reportedly purchased AI startup Emotient.

Apple has reportedly acquired artificial-intelligence startup Emotient, giving it access to technology that could one day imbue its devices with the ability to "read" people's emotions through their facial expressions.

Emotient's emotion-recognition technology derives from the Machine Perception Lab at the University of California at San Diego and has focused primarily on helping advertisers understand viewer reactions to their ads.

04/28/2015 – Facial Expression Recognition Technology Video

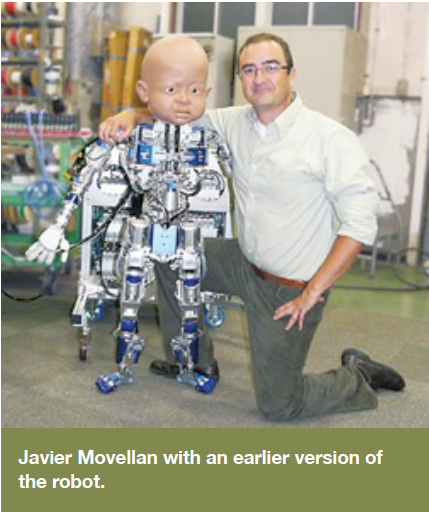

Marian Bartlett and Javier Movellan speak about commercializing facial expression recognition technology fostered by TDLC and INC at UC San Diego. Credit: UCSD Technology Transfer Office.

08/14/2014 – Salk Institute laboratory head talks new role in brain cells

Scientists at the Salk Institute are gaining new understanding of how the human brain truly works. Researchers now say cells called astrocytes play a major role in certain types of memories. These cells make up about half of your brain, and it was previously thought they only acted as a support system for neurons. Now, scientists say these cells are responsible for helping you recognize people, places and things from the past.

Professor Terrence Sejnowski of the Salk Institute talked live on KUSI News Thursday night about what this discovery means for future treatments of neurological disorders.

07/28/2014 – Astrocytes, The Brain's Lesser Known Cells, Get Some Cognitive Respect

When something captures your interest, like this article, unique electrical rhythms called gamma oscillations sweep through your brain.

These gamma oscillations reflect a symphony of cells—both excitatory and inhibitory—playing together in an orchestrated way. Though their role has been debated, gamma waves have been associated with higher-level brain function, and disturbances in the patterns have been tied to schizophrenia, Alzheimer's disease, autism, epilepsy and other disorders.

Now, new research from the Salk Institute shows that little-known supportive cells in the brain called astrocytes may in fact be major players that control these waves.

04/25/2014 – Man vs. Computer: Which Can Best Spot Pain Fakers?

Researchers at the University of California, San Diego, are working on a breakthrough that could change how doctors treat patients and their pain. Many doctors' offices have started displaying charts with faces showing various levels of pain, but what if a person is faking it?

04/13/2014 – Salk Researchers' Work Explains How Brain Selects Memories

By Chris Jennewein

Scientists at the Salk Institute in La Jolla have created a new model of memory that explains how neurons retain select memories a few hours after an event. This new framework provides a more complete picture of how memory works, which can inform research into disorders like Parkinson’s, Alzheimer’s, post-traumatic stress and learning disabilities.

The work is detailed in the latest issue of the scholarly journal Neuron. “Previous models of memory were based on fast activity patterns,” said Terrence Sejnowski, holder of Salk’s Francis Crick Chair and a Howard Hughes Medical Institute Investigator. “Our new model of memory makes it possible to integrate experiences over hours rather than moments.”

03/13/2014 – SCCN researchers receive the "Best Paper Award" at EMBC'2013

UC San Diego Start-Up Emotient Shows the Face of New Technology

By Paul K. Mueller

The start-up Emotient is a prime example of how industry, academia, and venture capital can combine to create a groundbreaking business.

The basic technology arose in UC San Diego's Machine Perception Laboratory, led by Javier R. Movellan, a Research Scientist in the Institute for Neural Computation. Movellan and his colleague Marian Bartlett pioneered the automation of facial coding using computer vision and machine learning.

Supported by entrepreneur Ken Denman and investor Seth Neiman of Crosspoint Venture Partners, the Emotient team led by Movellan has created the Emotient API, a sophisticated facial-expression-recognition technology with applications in the health care, retail, and entertainment industries.

In retail, the Emotient API technology allows store owners to assess customer service and quickly enhance their customers' experience. In health care, the technology provides an opportunity for physicians to better engage patients through online video calls, and may help in diagnosing depression and other mental disorders. In video games, the Emotient API can track gamers' emotional and physical responses so that the content and pace of a game can be adjusted to give each player a personalized experience.

"Seth Neiman pointed me to the UC San Diego team," said Denman, now Emotient's chief executive officer. "He told me they were the most published, the most experienced, the most enthusiastic researchers doing this work."

Movellan credits the university for the smooth and efficient start-up process.

"There was a genuine will to help Emotient innovate," he said. "UC San Diego is amazing at working on interdisciplinary science. They were really innovative in helping computer scientists, psychologists, and entrepreneurs collaborate."

Denman also credits the university's Technology Transfer Office (TTO) and its associate director William Decker.

"I worked with William Decker, and I was very impressed with his knowledge, skill, and ability to get things done and to keep his commitments and be reasonable in his negotiations overall," Denman said. "I was very pleasantly surprised."

Emotient now joins the long list of start-ups (currently more than 180) that the TTO has helped to establish, Decker said.

"Ken Denman is an innovator, and a pleasure to work with. We hope he considers other technologies now under way at UC San Diego."

Media Contact

Paul K. Mueller, 858-534-8564, pkmueller@ucsd.edu

Karen Cheng, 858-822-3276, klc004@ucsd.edu

01/20/2014 – INC research lab (MPLAB) interviewed by zdnet.com

The future of shopping: When psychology and emotion meet analytics

Jan 20th, 2014

By Larry Dignan for Between the Lines

Summary: The best part about the retail sector is that it combines four fun areas: business, technology, human behavior, and psychology. Here's a tour of what may be coming to a store near you.

Shopping is going to look a lot more analytical in the near future, with a good bit of video added to track emotions and engagement.

Welcome to the future of retail, which is quickly moving beyond somewhat silly questions about whether tablets will run on Android, iOS, or Windows, and becoming much more focused on actual applications and sales.

The best part about the retail sector is that it combines four fun areas: business, technology, human behavior, and psychology. Here's a brief tour of technologies that range in maturity from those that are implemented today to ones that'll take a while to be adopted.

Many of the aforementioned technologies were pulled together by Intel, which has a large retail technology unit as well as an Internet of Things division. The two areas are increasingly merging. Intel realized long ago that analytics and the Internet of Things are going to drive a lot of server and processor sales.

Emotion tracking meets retail

At Intel's booth at the National Retail Federation annual retail powwow in New York City, one of the more popular demonstrations revolved around Emotient, a startup based in San Diego. In a nutshell, Emotient captures your facial expressions, gauges your emotions, and turns that data into actionable items for a retailer.

For instance, if a consumer walks up to a display and looks frustrated, retailers will know that something needs to be tweaked. Joy would prod the retailer to buy more of that good. Stores could send help and personnel to folks depending on their emotional response to an item.

Marian Bartlett, founder and lead scientist at Emotient, said her company's analytics software — based on artificial intelligence and pattern recognition — is being used for research as well as product testing, say an emotional response to a fragrance. Emotient launched its products in June and has pilots in fast food, automotive, and health care. In a shopping context, Emotient "aims to measure emotion and tell the store managers that someone is confused in aisle 12", said Bartlett.

My take: Emotient could have a killer app for retail, but may freak people out. The data is anonymous, but facial recognition has a bit of a Minority Report feel to it. Personally, I doubt millennials will care. Others may fret about privacy; the key for retailers and consumer product good companies will be to be transparent and dangle carrots so shoppers won't sweat revealing how they really feel about something.

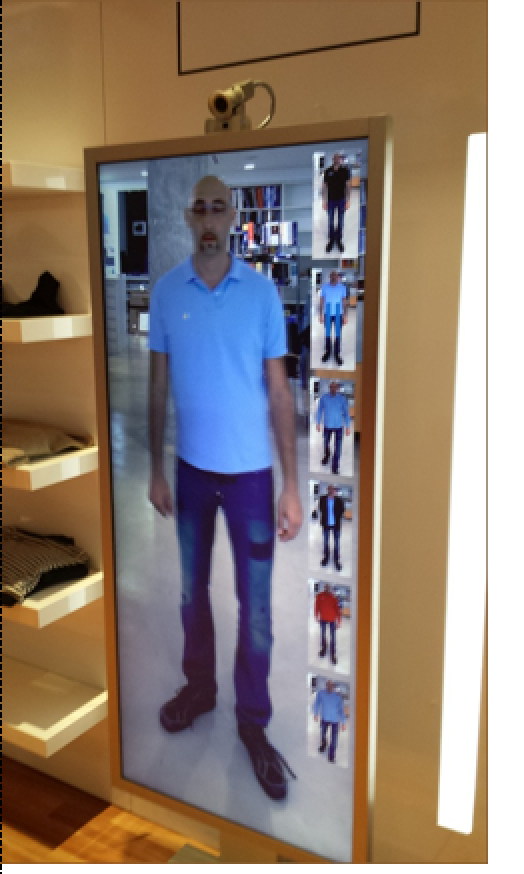

Mirror, mirror

Intel partner MemoMi also demonstrated a memory mirror that allows you to try on multiple outfits, compare them, and then share with peers. The mirror is controlled by hand gestures. The biggest difference with the MemoMi mirror is that it actually seemed like it would fit into a normal shopping flow. Similar displays at previous NRF shows were a bit clunky.

My take: The magic mirror approach could work and is getting closer to being rolled out at retailers. Any retailer wanting to boost engagement via multiple channels would be interested. Magic mirrors are getting there.

Kinect in retail

Microsoft displays often revolve around how Kinect could be used in retail. The software giant also highlighted a large retail Surface tabletop display that integrated personalization and the shopping experience.

My take: Microsoft has a good chance of turning Kinect into a business tech staple. The biggest challenge is that these large touch displays and virtual mirrors haven't gone mainstream. Fortunately for Microsoft, most retailers are Windows shops, so it'll have plenty of opportunities to broaden its reach.

Upscale vending machines that provide an experience

Signifi, a privately held company from Canada, was outlining "automated retail spot shops". As far as technology goes, Signifi is more of an integrator. CEO Shamira Jaffer said the company's spot shops sit "in the middle of the website and store". Jaffer's aim is to combine big screens, social media, interactive features, and ambiance to create an experience that boosts sales and serves as a marketing vehicle, too. "These don't feel like vending machines," said Jaffer. "We work with the retailer to design them."

Call it automated retailing.

Indeed, Signifi has been talking to Rolex about the possibilities for spot shops. Rolex? Sure, the company wants to brand and wants more outlets with less overhead. Signifi will provide hardware, software, and support. The retailer handles inventory and stocking the machines. Another perk: The spot shops can take returns, too. "We provide a retail store in a box," she said.

My take: These spot shops could proliferate in the US, a country that so far only has three. There are more spot shops in Europe. BMW is one brand testing the concept, and Jaffer said that locations for spot shops go beyond airports and transportation hubs. Hospitals, which have a bevy of folks waiting around, and universities are also good locations for high-end brands and spot shops.

Video analytics meets store staffing

Scopix, a company demonstrating at the Intel booth, scans a store via video, rates whether a customer is engaged, and then provides real-time data so a retailer can close a sale. The Scopix technology is also used for queue management and predictive analytics to find patterns. One use case would be that the Scopix system could ping a mobile device on a store floor so employees could keep customers happy.

My take: Scopix has interesting technology, but it's unclear how it stands out. Video surveillance and analytics tools were everywhere at the NRF conference.

We'll give you discounts to watch our TV commercial

Actv8.me demonstrated technology that used audio cues to combine mobile applications from TV networks with commercials and direct response. Here's how it would work — and has worked in a few pilots. A TV watcher is sitting on the couch with a tablet and an app from Fox (or CBS, parent of ZDNet). The app and tablet listen to the TV ad and call up products such as an outfit worn by an actress. For watching the ad, consumers would get flipped a coupon, say a 20 percent discount for a store visit and 10 percent deal for an online sale. Intel provides the ad-serving technology.

Actv8 also announced a deal with NCR to bring its personalized proximity platform to kiosks and multiple industries.

My take: Actv8 could find an audience from media giants given that the technology also works for on-demand commercials. Actv8's technology could track engagement with TV ads, maintain rates, and even add revenue shares for sales.

10/20/2013 – INC research (MPLAB) acknowledged by nytimes.com

The Rapid Advance of Artificial Intelligence

Oct 20th, 2013

By John Markoff

nytimes.com

A gaggle of Harry Potter fans descended for several days this summer on the Oregon Convention Center in Portland for the LeakyCon gathering, an annual haunt of a group of predominantly young women who immerse themselves in a fantasy world of magic, spells and images.

The jubilant and occasionally squealing attendees appeared to have no idea that next door a group of real-world wizards was demonstrating technology that only a few years ago might have seemed as magical.

The scientists and engineers at the Computer Vision and Pattern Recognition conference are creating a world in which cars drive themselves, machines recognize people and "understand" their emotions, and humanoid robots travel unattended, performing everything from mundane factory tasks to emergency rescues.

C.V.P.R., as it is known, is an annual gathering of computer vision scientists, students, roboticists, software hackers — and increasingly in recent years, business and entrepreneurial types looking for another great technological leap forward.

The growing power of computer vision is a crucial first step for the next generation of computing, robotic and artificial intelligence systems. Once machines can identify objects and understand their environments, they can be freed to move around in the world. And once robots become mobile they will be increasingly capable of extending the reach of humans or replacing them.

Self-driving cars, factory robots and a new class of farm hands known as ag-robots are already demonstrating what increasingly mobile machines can do. Indeed, the rapid advance of computer vision is just one of a set of artificial intelligence-oriented technologies — others include speech recognition, dexterous manipulation and navigation — that underscore a sea change beyond personal computing and the Internet, the technologies that have defined the last three decades of the computing world.

"During the next decade we're going to see smarts put into everything," said Ed Lazowska, a computer scientist at the University of Washington who is a specialist in Big Data. "Smart homes, smart cars, smart health, smart robots, smart science, smart crowds and smart computer-human interactions."

The enormous amount of data being generated by inexpensive sensors has been a significant factor in altering the center of gravity of the computing world, he said, making it possible to use centralized computers in data centers — referred to as the cloud — to take artificial intelligence technologies like machine-learning and spread computer intelligence far beyond desktop computers.

Apple was the most successful early innovator in popularizing what is today described as ubiquitous computing. The idea, first proposed by Mark Weiser, a computer scientist with Xerox, involves embedding powerful microprocessor chips in everyday objects.

Steve Jobs, during his second tenure at Apple, was quick to understand the implications of the falling cost of computer intelligence. Taking advantage of it, he first created a digital music player, the iPod, and then transformed mobile communication with the iPhone. Now such innovation is rapidly accelerating into all consumer products.

"The most important new computer maker in Silicon Valley isn't a computer maker at all, it's Tesla," the electric car manufacturer, said Paul Saffo, a managing director at Discern Analytics, a research firm based in San Francisco. "The car has become a node in the network and a computer in its own right. It's a primitive robot that wraps around you."

Here are several areas in which next-generation computing systems and more powerful software algorithms could transform the world in the next half-decade.

Artificial Intelligence

With increasing frequency, the voice on the other end of the line is a computer.

It has been two years since Watson, the artificial intelligence program created by I.B.M., beat two of the world's best "Jeopardy" players. Watson, which has access to roughly 200 million pages of information, is able to understand natural language queries and answer questions.

The computer maker had initially planned to test the system as an expert adviser to doctors; the idea was that Watson's encyclopedic knowledge of medical conditions could aid a human expert in diagnosing illnesses, as well as contributing computer expertise elsewhere in medicine.

In May, however, I.B.M. went a significant step farther by announcing a general-purpose version of its software, the "I.B.M. Watson Engagement Advisor." The idea is to make the company's question-answering system available in a wide range of call center, technical support and telephone sales applications. The company says that as many as 61 percent of all telephone support calls currently fail because human support-center employees are unable to give people correct or complete information.

Watson, I.B.M. says, will be used to help human operators, but the system can also be used in a "self-service" mode, in which customers can interact directly with the program by typing questions in a Web browser or by speaking to a speech recognition program.

That suggests a "Freakonomics" outcome: There is already evidence that call-center operations that were once outsourced to India and the Philippines have come back to the United States, not as jobs, but in the form of software running in data centers.

Robotics

A race is under way to build robots that can walk, open doors, climb ladders and generally replace humans in hazardous situations.

In December, the Defense Advanced Research Projects Agency, or Darpa, the Pentagon's advanced research arm, will hold the first of two events in a $2 million contest to build a robot that could take the place of rescue workers in hazardous environments, like the site of the damaged Fukushima Daiichi nuclear plant.

Scheduled to be held in Miami, the contest will involve robots that compete at tasks as diverse as driving vehicles, traversing rubble fields, using power tools, throwing switches and closing valves.

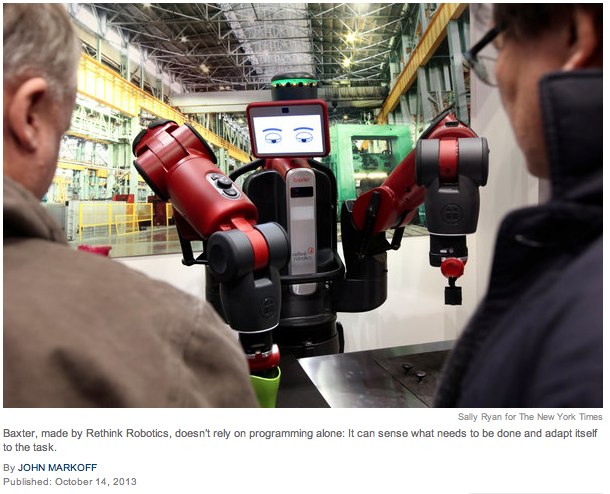

In addition to the Darpa robots, a wave of intelligent machines for the workplace is coming from Rethink Robotics, based in Boston, and Universal Robots, based in Copenhagen, which have begun selling lower-cost two-armed robots to act as factory helpers. Neither company's robots have legs, or even wheels, yet. But they are the first commercially available robots that do not require cages, because they are able to watch and even feel their human co-workers, so as not to harm them.

For the home, companies are designing robots that are more sophisticated than today's vacuum-cleaner robots. Hoaloha Robotics, founded by the former Microsoft executive Tandy Trower, recently said it planned to build robots for elder care, an idea that, if successful, might make it possible for more of the aging population to live independently.

Seven entrants in the Darpa contest will be based on the imposing humanoid-shaped Atlas robot manufactured by Boston Dynamics, a research company based in Waltham, Massachusetts. Among the wide range of other entrants are some that look anything but humanoid — with a few that function like "transformers" from the world of cinema. The contest, to be held in the infield of the Homestead-Miami Speedway, may well have the flavor of the bar scene in "Star Wars."

Intelligent Transportation

Amnon Shashua, an Israeli computer scientist, has modified his Audi A7 by adding a camera and artificial-intelligence software, enabling the car to drive the 65 kilometers, or 40 miles, between Jerusalem and Tel Aviv without his having to touch the steering wheel.

In 2004, Darpa held the first of a series of "Grand Challenges" intended to spark interest in developing self-driving cars. The contests led to significant technology advances, including "Traffic Jam Assist" for slow-speed highway driving; "Super Cruise" for automated freeway driving, already demonstrated by General Motors and others; and self-parking, a feature already available from a number of car manufacturers.

Recently General Motors and Nissan have said they will introduce completely autonomous cars by the end of the decade. In a blend of artificial-intelligence software and robotics, Mobileye, a small Israeli manufacturer of camera technology for automotive safety that was founded by Mr. Shashua, has made considerable progress. While Google and automotive manufacturers have used a variety of sensors including radars, cameras and lasers, fusing the data to provide a detailed map of the rapidly changing world surrounding a moving car, Mobileye researchers are attempting to match that accuracy with just video cameras and specialized software.

Emotional Computing

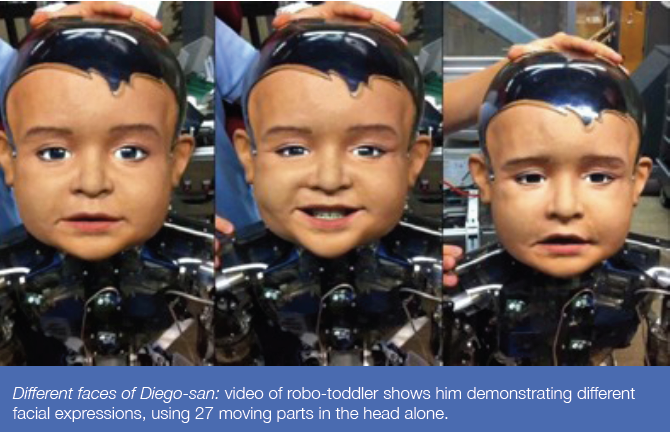

At a preschool near the University of California, San Diego, a child-size robot named Rubi plays with children. It listens to them, speaks to them and understands their facial expressions.

Rubi is an experimental project of Prof. Javier Movellan, a specialist in machine learning and robotics. Professor Movellan is one of a number of researchers now working on a class of computers that can interact with humans, including holding conversations.

Computers that understand our deepest emotions hold the promise of a world full of brilliant machines. They also raise the specter of an invasion of privacy on a scale not previously possible, as they move a step beyond recognizing human faces to the ability to watch the array of muscles in the face and decode the thousands of possible movements into an understanding of what people are thinking and feeling.

These developments are based on the work of the American psychologist Paul Ekman, who explored the relationship between human emotion and facial expression. His research found the existence of "micro expressions" that expose difficult-to-suppress authentic reactions. In San Diego, Professor Movellan has founded a company, Emotient, that is one of a handful of start-ups pursuing applications for the technology. A near-term use is in machines that can tell when people are laughing, crying or skeptical — a survey tool for film and television audiences.

Farther down the road, it is likely that applications will know exactly how people are reacting as the conversation progresses, a step well beyond Siri, Apple's voice recognition system.

Harry Potter fans, stand by.

10/10/2013 – Taking A Mindful Approach to the BRAIN Initiative


By Christine Clark

ucsdnews

"As the epicenter of scientific innovation, California must take bold and prompt action to capitalize on the short- and long-term benefits of the BRAIN Initiative," said Senate Majority Leader Ellen M. Corbett (D-East Bay) at a Senate Select Committee on Emerging Technology: Biotechnology and Green Energy Jobs public hearing held Friday at UC San Diego.

The event, "A Mindful Approach to the BRAIN Initiative," was convened by Corbett. It explored the state's role in accelerating the research, development and deployment of technologies to support the BRAIN (Brain Research through Advancing Innovative Neurotechnologies) Initiative, first unveiled by the Obama Administration in April 2013.

The research effort, in which UC San Diego, "Mesa" colleagues and private-public partners will play key roles, is designed to revolutionize understanding of how the brain works and uncover new ways to treat, prevent and cure brain disorders such as Alzheimer's, schizophrenia, autism, epilepsy and traumatic brain injury.

The discussions held Friday were attended by Chancellor Pradeep K. Khosla, who also was sitting in the front row at the White House when Obama made the BRAIN Initiative announcement on April 2.


"The president's initiative is charting the next frontier of science and UC San Diego is poised and ready to help our country lead the way," said Khosla. "Neuroscience, biology, and cognitive science are among the premier areas of strength on our campus, and we are really excited to be part of the effort to gain a deep understanding of human beings and how we behave."

In response to Obama's "grand challenge," UC San Diego established the Center for Brain Activity Mapping (CBAM) in May. The new center, headed by Ralph Greenspan, is under the aegis of the interdisciplinary Kavli Institute for Brain and Mind at UC San Diego. CBAM tackles the technological and biological challenge of developing a new generation of tools to enable recording of neuronal activity throughout the brain. It will also conduct brain-mapping experiments and analyze the collected data.

"This is another example of how California is leading the way, both in terms of understanding the human mind and how we can cure Alzheimer's, dementia and other diseases, and also in creating technologies, new innovations and jobs," said Khosla.

At the hearing, Corbett, who is chair of the Select Committee, said she intends to introduce legislation early next year that supports cutting-edge research like the BRAIN Initiative that can bring societal and economic benefits to California.


"Twenty-five years ago, the Human Genome Project led to the 'genomic revolution' and advanced some of the leading industries in our state," she said. "The BRAIN Initiative is the next logical step."

At the hearing, representatives from UC San Diego were joined by other academic and industry leaders in voicing strong support of the initiative. Those testifying included Greenspan, founding director of CBAM and associate director of the Kavli Institute for Brain and Mind at UC San Diego (KIBM); Terry Sejnowski of the Salk Institute for Biological Studies and UC San Diego and director of the campus's Institute for Neural Computation; and Ramesh Rao, director of the Qualcomm Institute, the UC San Diego division of Calit2.


"The last century we went to the moon to explore outer space; this century we're exploring inner space by studying the link between brain activity and behavior," said Sejnowski. "We need to find what it is that excites young people. We need to attract bright young minds the way President John F. Kennedy did … In 1969, the year we went to the moon, the average age of a NASA engineer was 27."

When asked by Corbett if the state of California was doing enough to support the education needed by the BRAIN Initiative, Greenspan answered that more science should be integrated into the general education curriculum. "It is important to build STEM programs and make them accessible to students … Students need to see themselves as future scientists."

Corbett concluded the conversation by saying that she thought the discussions helped dispel the notion that people come to California only for the weather. "You come here for the education and innovation," she said.

10/01/2013 – INC researcher, John Iversen, is elected to SMPC's board

INC researcher elected to the board of the Society for Music Perception and Cognition

Welcome to The Society for Music Perception and Cognition (SMPC), a scholarly organization dedicated to the study of music cognition. Use our website to learn about this rapidly growing field, including information on researchers, conferences, and student opportunities. Join us as we explore one of the most fascinating aspects of being human.

09/22/2013 – Hot on the trail of Parkinson's


Sep 22nd, 2013

By Gary Robbins

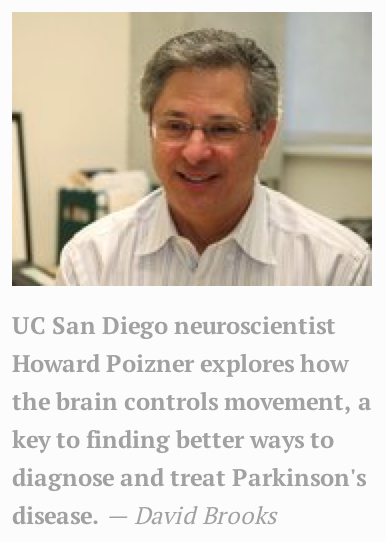

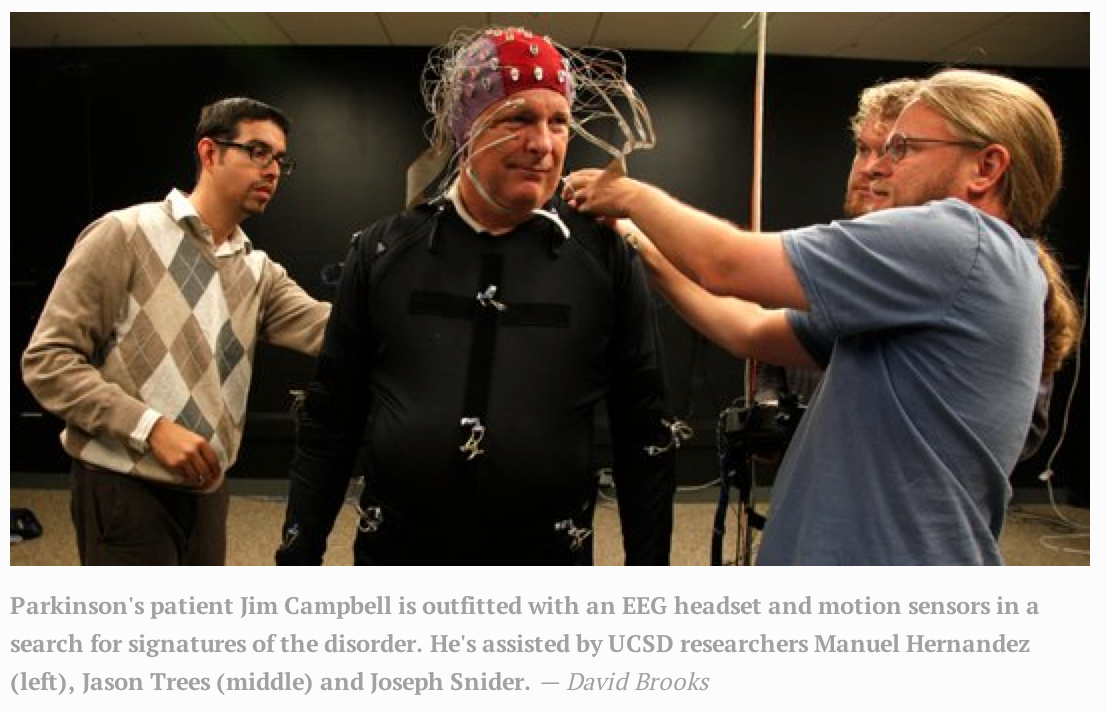

Michael J. Fox will return full time to network television September 26th, starring in an NBC sitcom that will give many viewers their first long look at Parkinson's disease, a neurodegenerative disorder that can affect a person's speech, movement and balance.

Fox, 52, was diagnosed with Parkinson's about 20 years ago. He's since become a leading advocate for expanding research on a disease that afflicts 1 million Americans, including singer Linda Ronstadt, who recently announced that she can no longer sing because of the illness.

UC San Diego has a large Parkinson's research program, part of it led by Howard Poizner, a neuroscientist who uses electroencephalography, or EEG, to measure the brain's electrical activity along the scalp.


EEG can be an effective tool for studying how the brain controls movement, work that's critical to finding better ways to diagnose and treat Parkinson's. Poizner recently discussed his work with U-T San Diego, and here is an edited version of that conversation:

Q: Astronomers have telescopes that can peer billions of years back in time. Why can't scientists see the short distance through the skull into areas where Parkinson's slowly unfolds?

A: We can see into the skull, but we're looking at very weak signals. The signals that we see at the scalp are roughly a million times weaker than an AA battery. And the skull distorts the picture, making it harder for us to see what's going on. We're dealing with complexity, too. There are places in the cortex where you'll find 100,000 neurons making more than 1 trillion connections in an area about the size of the head of a pin. Signaling can occur hundreds of times a second across a vast communications network.


Q: It sounds like science has a small number of tools that give a crude look at what's happening inside the most complicated network known to humans. Is that the case?

A: There are tools that allow you to precisely examine what's happening inside the brains of animals. But things are very limited when it comes to looking at the living human brain, and we want to be noninvasive. Yet progress is being made across many areas, from the way we use EEG devices to improvements that are coming with magnetic resonance imaging (MRI). They'll give us a clearer look at brain activity, which we need. We've got to be able to separate abnormalities that are specific to Parkinson's from those that come with related diseases.

Q: You personally make heavy use of EEGs to record brain activity. Why?


A: The EEG can be used in conjunction with other tools to help us get a better real-time look at Parkinson's, which is a chronic, progressive movement disorder. The disease can make it difficult for people to stand up, or to move their feet, or make smooth movements. And it can impair their ability to take corrective action, like grabbing a bottle of water that's been knocked over. To look at this, we put motion sensors on a patient's hands, arms, legs and other parts of their body, which helps us analyze the fine details of their movements. At the same time, we're using the EEG headset to map what's happening in their brain. We then can relate their ongoing brain activity to moment-by-moment changes in their movements. Hopefully, we'll be able to extract signatures of brain activity that are characteristic of Parkinson's in its early stages. We really need that. There's no blood test for this disease.


Q: On his new show, Michael J. Fox moves around a lot, and he moves quickly. You're keying in more on movement. Is that a change in what researchers do?

A: You raise a very important point. Traditionally, monitoring brain activity has required people to be sitting still or lying down with their heads restrained. This has been done to prevent muscle activity from interfering with the brain recordings. But that's been changing due to advances in hardware and advances in the way EEG signals are analyzed. And we can record these signals wirelessly. We can now record people's brain activity while they're actually moving around or reacting to things that they see while wearing a virtual reality headset. This is critically important; it allows us to study how the brain acts in real-world situations.

Q: What kinds of things are you looking at?

A: I do basic research into how the brain controls movement, which is closely tied to Parkinson's. The disease affects the circuitry of the brain. It alters the timing and sequence and rhythm of the brain's communication networks. These circuits can get locked in particular rhythms, which makes it harder for people to react to stimuli.


Q: Fox is a beloved figure who will be making light of his own struggles with Parkinson's in his sitcom. Is this show likely to teach people a lot about a disease that few understand, or will viewers turn away because it is hard to watch a person with a movement disorder?

A: My gut says that people will watch the show. They relate to Michael J. Fox. They know him, in a sense. He's engaging, funny. I think they'll react to the fact that he's finding happiness and hope while living with a devastating disease. He's bringing this message into their homes through TV, and they need to hear that there's hope. We're seeing others, people like Linda Ronstadt, living with Parkinson's. We don't yet know the precise causes of this disease. But there are a lot of excellent therapies that can help a lot of people, and these are exciting times in the world of research. This is a disease that will be cured. It won't happen, say, in the next five years. But it's going to happen.

Poizner's research is funded by the National Institutes of Health, the Office of Naval Research and the National Science Foundation.


09/17/2013 – INC Co-Director selected by the NSF to take part in a five-year, multi-institutional, $10 million research project

Bioengineers Researching Smart Cameras and Sensors that Mimic, Exceed Human Capability

Sep 17th, 2013

By Catherine Hockmuth


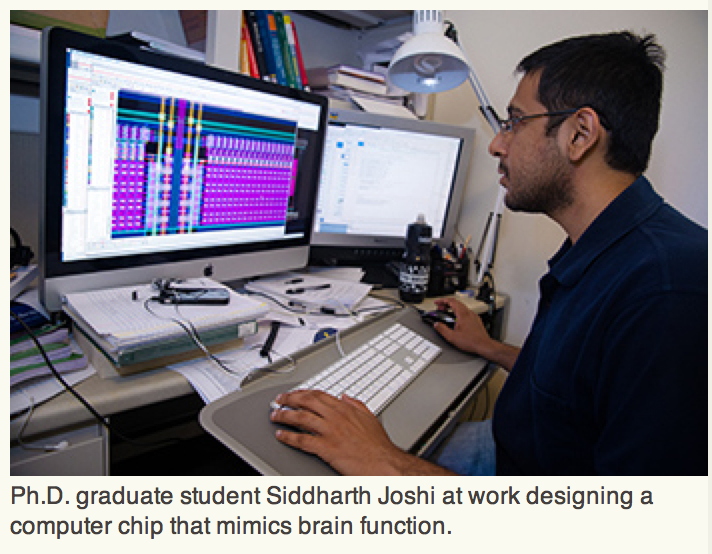

University of California, San Diego bioengineering professor Gert Cauwenberghs has been selected by the National Science Foundation to take part in a five-year, multi-institutional, $10 million research project to develop a computer vision system that will approach or exceed the capabilities and efficiencies of human vision. The Visual Cortex on Silicon project, funded through NSF's Expeditions in Computing program, aims to create computers that not only record images but also understand visual content and situational context in the way humans do, at up to a thousand times the efficiency of current technologies, according to an NSF announcement.

Smart machine vision systems that understand and interact with their environments could have a profound impact on society, including aids for visually impaired persons, driver assistance capabilities for reducing automotive accidents, and augmented reality systems for enhanced shopping, travel, and safety.

For their part in the effort, Cauwenberghs, a professor in the Department of Bioengineering at the UC San Diego Jacobs School of Engineering, and his team are developing computer chips that emulate how the brain processes visual information. "The brain is the gold standard for computing," said Cauwenberghs, adding that computers work completely differently from the brain: they act as passive processors of information, working through problems with sequential logic. The human brain, by comparison, processes information by sorting through complex input from the world and extracting knowledge without direction.

While several computer vision systems today can each successfully perform one or a few human tasks, such as detecting human faces in point-and-shoot cameras, they are still limited in their ability to perform a wide range of visual tasks, to operate in complex, cluttered environments, and to provide reasoning for their decisions. In contrast, the visual cortex in mammals excels in a broad variety of goal-oriented cognitive tasks, and is at least three orders of magnitude more energy efficient than customized state-of-the-art machine vision systems.


Cauwenberghs said the Visual Cortex on Silicon project offers a unique collaborative opportunity with experts across the globe in neuroscience, computer science, nanoengineering and physics.

The project has other far-reaching implications for neuroscience research. By developing chips that can function more like the human brain, Cauwenberghs believes researchers can achieve a number of significant breakthroughs in our understanding of brain function, from the work of single neurons all the way up to a more holistic view of the brain as a system. For example, building chips that model different aspects of brain function, such as how the brain processes visual information, gives researchers a more robust tool to understand where problems arise that contribute to disease or neurological disorders.


The Expeditions in Computing program, which started in 2008, represents NSF's largest single investment in computer science research. As of today, 16 awards have been made through this program, addressing subjects ranging from foundational research in computing hardware, software and verification to research in sustainable energy, health information technology, robotics, mobile computing, and Big Data.

08/05/2013 – Accelerating Brain Research with Supercomputers (video)


August 8th, 2013

By Aaron Dubrow, Texas Advanced Computing Center

The brain is the most complex device in the known universe. With 100 billion neurons connected by a quadrillion synapses, it's like the world's most powerful supercomputer on steroids. To top it all off, it runs on only 20 watts of power… about as much as the light in your refrigerator.

These were a few of the introductory ideas discussed by Terrence Sejnowski, Director of the Computational Neurobiology Laboratory at the Salk Institute for Biological Studies, a co-director of the Institute for Neural Computation at UC San Diego, an investigator with the Howard Hughes Medical Institute and a member of the advisory committee to the director of the National Institutes of Health (NIH) for the BRAIN (Brain Research through Advancing Innovative Neurotechnologies) Initiative, which was launched in April 2013.

"I was in the White House when the program was announced," Sejnowski recalled. "It was very exciting. The President was telling me that my life's work was going to be a national priority over the next 15 years."

At that event, the NIH, the National Science Foundation, and the Defense Advanced Research Projects Agency announced their commitment to dedicate about $110 million in the first year to developing innovative tools and techniques that will advance brain studies, with funding expected to ramp up as the Initiative gains ground.

In a recent talk in San Diego at the XSEDE13 conference — the annual meeting of researchers, staff and industry who use and support the U.S. cyberinfrastructure — Sejnowski described the rapid progress that neuroscience has made over the last decade and the challenges ahead. High-performance computing, visualization and data management and analysis will play critical roles in the next phase of the neuroscientific revolution, he said.

A deeper understanding of the brain would advance our grasp of the processes that underlie mental function. Ultimately it may also help doctors comprehend and diagnose mental illness and degenerative diseases of the brain and possibly even intervene to prevent these diseases in the future.

"Not only can we understand what happens when the brain is functioning normally, maybe we can understand what's happening when it's not functioning right, as in mental disorders," he said.

Currently, this dream is a long way off. Brain activity occurs at every scale from the atomic to the macroscopic, and each level contributes to the working of the brain. Sejnowski explained the challenge of understanding even a single aspect of the brain by showing a series of visualizations that illustrated just how interwoven and complex the various components of the brain are.

One video examined how the axons, dendrites and other components fit together in a small piece of the brain, called the neuropil. He likened the structure to "spaghetti architecture." A second video showed what looked like fireworks flashing across many regions of the brain and represented the complex choreography by which electrical signals travel in the brain.

Despite the rapid rate of innovation, the field is still years away from obtaining a full picture of a mouse's or even a worm's brain. It would require an accelerated rate of growth to reach the targets that neuroscientists have set for themselves. For that reason, the BRAIN Initiative is focusing on new technologies and tools that could have a transformative impact on the field.

"If we could record data from every neuron in a circuit responsible for a behavior, we could understand the algorithms that the brain uses," Sejnowski said. "That could help us right now."

Larger, more comprehensive and capable supercomputers, as well as compatible tools and technologies, are needed to deal with the increasing complexity of the numerical models and the unwieldy datasets gleaned by fMRI or other imaging modalities. Other tools and techniques that Sejnowski believes will be required include industrial-scale electron microscopy; improvements in optogenetics; image segmentation via machine learning; developments in computational geometry; and crowdsourcing to overcome the "Big Data" bottleneck.

"Terry's talk was very inspiring for the XSEDE13 attendees and the entire XSEDE community," said Amit Majumdar, technical program chair of XSEDE13. Majumdar directs the scientific computing application group at the San Diego Supercomputer Center (SDSC) and is affiliated with the Department of Radiation Medicine and Applied Sciences at UC San Diego. "With XSEDE being the leader in research cyberinfrastructure, it was great to hear that tools and technologies to access supercomputers and data resources are a big part of the BRAIN Initiative."

For his part, over the past decade Sejnowski led a team of researchers to create two software environments for brain simulations, called MCell (or Monte Carlo Cell) and Cellblender. MCell combines spatially realistic 3D models of the geometry of the brain (as determined by brain scans and computational analysis), and simulates the movements and reactions of molecules within and between brain cells—for instance, by populating the brain's 3D geometry with active ion channels, which are responsible for the chemical behavior of the brain. Cellblender visualizes the output of MCell to help computational biologists better understand their results.

Researchers at the Pittsburgh Supercomputing Center, the University of Pittsburgh, and the Salk Institute developed these software packages collaboratively with support from the National Institutes of Health, the Howard Hughes Medical Institute, and the National Science Foundation. The open-source software runs on several of the XSEDE-allocated supercomputers and has generated hundreds of publications.

MCell and Cellblender are a step in the right direction, but they will be stretched to their limits when dealing with massive datasets from new and emerging imaging tools. "We need better algorithms and more computer systems to explore the data and to model it," Sejnowski said. "This is where the insights will come from — not from the sheer bulk of data, but from what the data is telling us."

Supercomputers alone will not be enough either, he said. An ambitious, long-term project of this magnitude requires a small army of students and young professionals to make progress.

Sejnowski likened the announcement of the BRAIN Initiative to the famous speech where John F. Kennedy vowed to send an American to the moon. When Neil Armstrong landed on the moon eight years later, the average age of the NASA engineers who sent him there was 26. Encouraged by JFK's passion for space travel and galvanized by competition from the Soviet Union, talented young scientists joined NASA in droves. Sejnowski hopes the same will be true for the neuroscience and computational science fields.

"This is an idea whose time has come," he said. "The tools and techniques are maturing at just the right time and all we need is to be given enough resources so we can scale up our research."

The annual XSEDE conference, organized by the National Science Foundation's Extreme Science and Engineering Discovery Environment (xsede.org) with the support of corporate and non-profit sponsors, brings together the extended community of individuals interested in advancing research cyberinfrastructure and integrated digital services for the benefit of science and society. XSEDE13 was held July 22-25 in San Diego; XSEDE14 will be held July 13-18 in Atlanta. For more information, visit https://conferences.xsede.org/xsede14

07/17/2013 – SCCN researchers receive the "Best Paper Award" at EMBC'2013

35th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC'13)

July 17th, 2013

SCCN researchers receive the "Best Paper Award" at the International Neurotechnology Consortium Workshop at the 2013 International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC'13).

Mullen, T., Kothe, C., Chi, M., Ojeda, A., Kerth, T., Makeig, S., Cauwenberghs, G., Jung, T-P. Real-Time Modeling and 3D Visualization of Source Dynamics and Connectivity Using Wearable EEG.

06/17/2013 – SCCN researcher awarded the International BCI (Brain-Computer Interface 2013) Meeting's Poster Prize for Technical Merit.

International BCI (Brain-Computer Interface 2013) Meeting

June 17th, 2013

SCCN researcher awarded the International BCI (Brain-Computer Interface 2013) Meeting's Poster Prize for Technical Merit:

Tim Mullen, UC San Diego, for "Real-Time Estimation and 3D Visualization of Source Dynamics and Connectivity Using Wearable EEG"

http://dx.doi.org/10.3217/978-3-85125-260-6-106 (PDF)

See more BCI 2013 coverage here...

05/16/2013 – UC San Diego Creates Center for Brain Activity Mapping (CBAM)


May 16, 2013

By Inga Kiderra

see source article here...

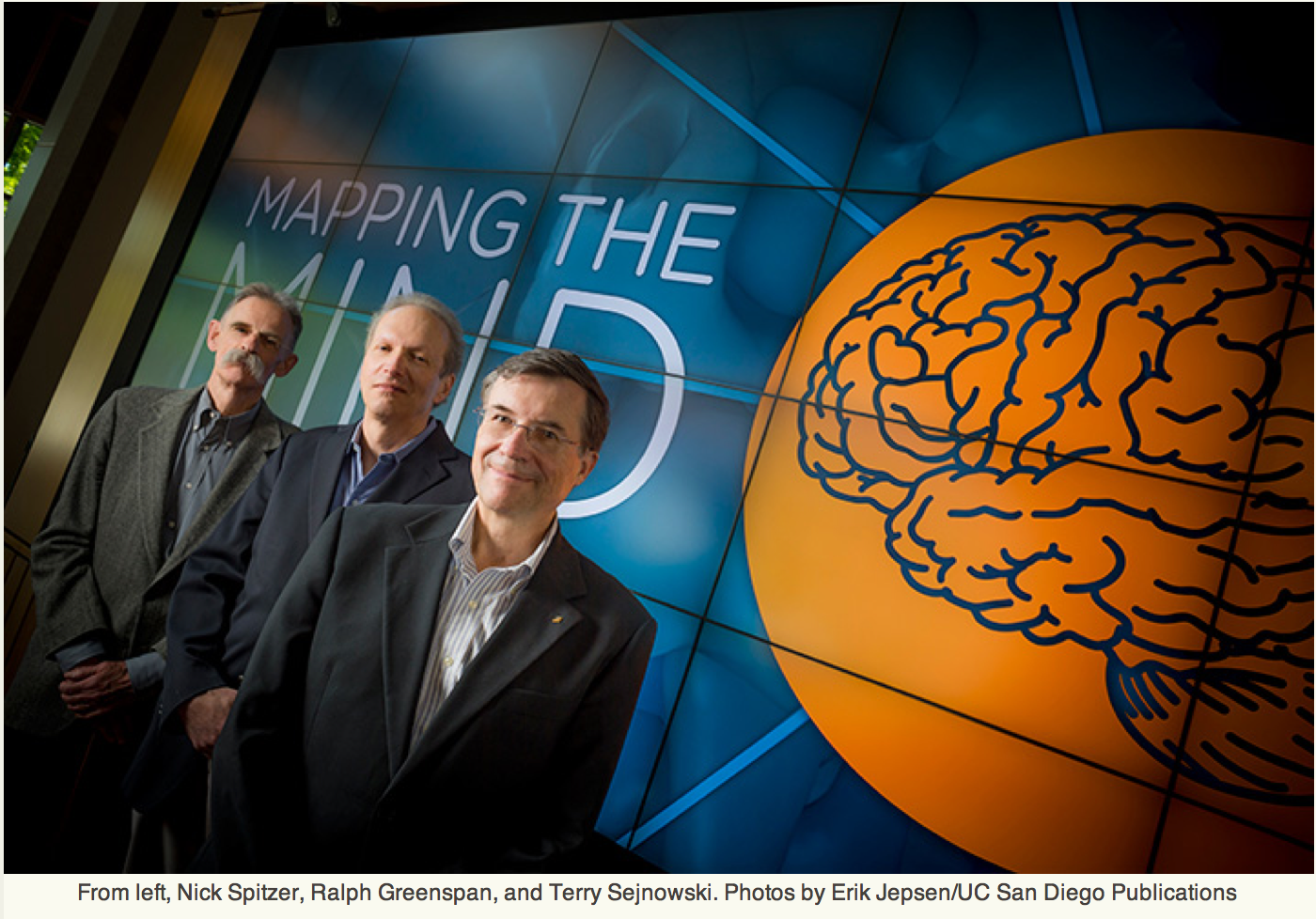

Responding to President Barack Obama's "grand challenge" to chart the function of the human brain in unprecedented detail, the University of California, San Diego has established the Center for Brain Activity Mapping (CBAM). The new center, under the aegis of the interdisciplinary Kavli Institute for Brain and Mind at UC San Diego, will tackle the technological and biological challenge of developing a new generation of tools to enable recording of neuronal activity throughout the brain. It will also conduct brain-mapping experiments and analyze the collected data.


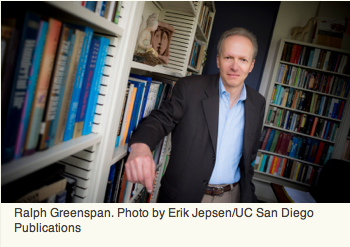

Ralph Greenspan, one of the original architects of a visionary proposal that eventually led to the national BRAIN Initiative launched by President Obama in April, has been named CBAM's founding director.

UC San Diego Chancellor Pradeep K. Khosla, who attended Obama's unveiling of the BRAIN Initiative, said: "I am pleased to announce the launch of the Center for Brain Activity Mapping. This new center will require the type of in-depth and impactful research that we are so good at producing at UC San Diego. We have strengths here on our campus and the Torrey Pines Mesa, both in breadth of talent and in the scientific openness to collaborate across disciplines, that few others can offer the project."

Greenspan, who also serves as associate director of the Kavli Institute for Brain and Mind at UC San Diego, said CBAM will focus on developing new technologies necessary for global brain-mapping at the resolution level of single cells and the timescale of a millisecond, participating in brain-mapping experiments, and developing the necessary support mechanisms for handling and analyzing the enormous datasets that such efforts will produce.

Brain-mapping discoveries made by CBAM may shed light on such brain disorders as autism, traumatic brain injury and Alzheimer's, and could potentially point the way to new treatments, Greenspan said. The technologies developed and advances in understanding brain networks will also likely have industrial applications outside of medicine, he said.

The new center will bring together researchers from neuroscience (including cognitive science, psychology, neurology and psychiatry), engineering, nanoscience, radiology, chemistry, physics, computer science and mathematics.

"An essential component of the center will be its close relationships with other San Diego research institutions and with industrial partners in the region's hi-tech and biotech clusters," said Nick Spitzer, distinguished professor of neurobiology and director of the Kavli Institute for Brain and Mind at UC San Diego.

Beyond bringing researchers together, the center will seek the resources to support specific projects. Some of these projects will likely build on existing research at UC San Diego while others will be brand new, growing out of the novel collaborations that CBAM will encourage and nurture.

The center aims to compete for national grant funds but will also seek to pursue projects with the help of philanthropists and industry partners.

Administratively, CBAM will be part of the interdisciplinary Kavli Institute for Brain and Mind. The Qualcomm Institute at UC San Diego (formerly known as Calit2) will support CBAM with some initial space for collaborative projects.

Greenspan will soon assemble a director's council, to help guide the center's scientific program, and an advisory board, to assist on general strategy and fundraising.

Greenspan authored the proposal for CBAM with Spitzer and Terry Sejnowski, director of UC San Diego's Institute for Neural Computation, who holds joint appointments with UC San Diego and The Salk Institute.

The trio identified the center's immediate goal as preparing CBAM to compete effectively for federal BRAIN Initiative funding. Activities will include, for example, topic-oriented meetings and workshops to identify potential project areas.

Medium-term goals include providing seed-grant support for specific projects, building strong ties among scientists from the different relevant disciplines, and creating an outreach program. The center will also seek dedicated space on campus.

In the long term, CBAM hopes to create an endowment for stable support of the most promising projects and to facilitate the formation of new start-up companies.

"We have the capability and the atmosphere here to make some major advances on the BRAIN Initiative," Greenspan said. "We are among the best-positioned places anywhere to make a significant contribution to the president's challenge.

"We invite members of the scientific and philanthropic communities – here in San Diego and further afield," he said, "to join with us on this vital quest."

04/25/2013 – UC San Diego's "Simphony" Research Earns Grammy Foundation Support


April 25, 2013


A UC San Diego study of the impact of music training on the brain and behavioral development in children has been awarded a grant of nearly $20,000 by the Grammy Foundation. "SIMPHONY," the grant award says, "is a unique collaboration designed to understand how music training affects children's brains and the general cognitive skills like language and attention. It is the first study of its kind, and will track 60 children annually starting at ages 5-10 as they engage in ensemble music training using an extensive battery of neural and behavioral testing."

SIMPHONY, short for Studying the Impact Music Practice Has On Neurodevelopment in Youth, is a collaboration among researchers at UC San Diego's Center for Human Development and the Institute for Neural Computation.

"We're grateful for the Grammy Foundation's support of this important research," said John Iversen, who directs the five-year longitudinal study in close collaboration with Terry Jernigan, director of the Center for Human Development.


"Our partnership with the San Diego Youth Symphony's Community Opus program, which brings intensive music training to elementary schools in Chula Vista, will give us valuable insights into the effect of musical training on developing brains, as well as a better understanding of the links between brain structure and behavior."

The study includes not only a non-music-learning control group, said Iversen, but also another control group of students studying martial arts, which can help pinpoint any benefits due to musical training as opposed to more general enrichment activities.

The Grammy Foundation award of nearly $20,000 will enable the project to retain an older cohort of music students within the study, and will support their testing for two additional years.

Jernigan, the project's lead neuroimaging researcher, is a leader in the field of child neurodevelopment; Iversen and co-investigator Aniruddh Patel (now at Tufts University) are noted for their research into music cognition and the cognitive relationship between music and language.

The Grammy Foundation Grant Program, funded by the Recording Academy, provides annual funding to organizations and individuals to advance the archiving and preservation of the recorded sound heritage of the Americas, as well as research projects related to the impact of music on the human condition.

Organized Research Units, including the Institute for Neural Computation and the Center for Human Development, make up a significant portion of the university's billion-dollar research enterprise, and contribute to UC San Diego's pioneering interdisciplinary and multidisciplinary leadership.


04/07/2013 – Neuroscientist Terry Sejnowski attends White House announcement of collaborative BRAIN Initiative

Salk applauds Obama's ambitious BRAIN Initiative to research human mind


April 02, 2013

LA JOLLA, CA—Salk neuroscientist Terrence J. Sejnowski joined President Barack Obama in Washington, D.C., on April 2, 2013, at the launch of the Brain Research through Advancing Innovative Neurotechnologies (BRAIN) Initiative—a major Administration neuroscience effort that advances and builds upon collaborative scientific work by leading brain researchers such as Salk's own Sejnowski.

"We have the chance to improve the lives of not just millions, but billions of people on this planet," said the president. "It will require us to embrace the spirit of discovery that made America—America."


Terrence J. Sejnowski, Professor and Head of the Computational Neurobiology Laboratory, Howard Hughes Medical Institute Investigator, Francis Crick Chair. Courtesy of the Salk Institute for Biological Studies.

In his introductory remarks, National Institutes of Health Director Francis Collins dubbed Obama the "Scientist in Chief," and said, "Asking the people in this room to delay innovation would be like asking the cherry trees to stop blooming."

Obama compared the BRAIN Initiative to the Human Genome Project, which mapped the entire human genome and ushered in a new era of genetics-based medicine. "Every dollar spent on the human genome has returned $140.00 to our economy," the president said. Instead of charting genes, BRAIN will help visualize the brain activity directly involved in such vital functions as seeing, hearing and storing memories, a crucial step in understanding how to treat diseases and injuries of the nervous system.

The BRAIN Initiative is launching with approximately $100 million in funding for research supported by the National Institutes of Health (NIH), the Defense Advanced Research Projects Agency (DARPA), and the National Science Foundation (NSF) in the President's Fiscal Year 2014 budget.

Foundations and private research institutions are also investing in the neuroscience that will advance the BRAIN Initiative. Along with the Salk Institute, they include The Allen Institute for Brain Science, the Kavli Foundation, and The Howard Hughes Medical Institute.

"This initiative is a boost for the brain like the Human Genome Project was for the genes," says Sejnowski, the Francis Crick Chair and head of the Computational Neurobiology Laboratory at Salk. "This is the start of the million neuron march."

The BRAIN Initiative and its focus on leveraging emerging technologies dovetail with the Salk Institute's Dynamic Brain Initiative, a neuroscience initiative focused on providing a better understanding of the brain, spinal cord and peripheral nervous system. The Salk Institute itself is home to several pioneering tool builders, among them Edward M. Callaway, already famous among systems neuroscientists for using a modified rabies virus to trace neuronal connections in the visual system.

"Scientists have known since the time of Galileo that new tools can open up whole new lines of research," says Callaway, holder of the Audrey Geisel Chair in Biomedical Science. "But for us, tools aren't just mechanical instruments, they can be viruses, genes, chemical dyes, or even photons."

Tools are also mathematical, explains Sejnowski. "When you are trying to understand the electrical and chemical interactions of millions of brain cells, you are looking at a multi-dimensional problem, which can only be solved by computational modeling," he says. "My lab has as many mathematicians and physicists and engineers as it has biologists."

Summing up his excitement over the promise of BRAIN, Sejnowski says, "Imagine how it must have felt to be a rocket engineer when Kennedy said we would reach for the moon. You know there's an almost unimaginable amount of hard work ahead of you—and yet you can't wait to get started."

The initiative builds on discussions between a group of leading neuroscientists and nanotechnologists from around the country, including Sejnowski. The scientists published an article on the topic in the March 15 issue of Science, in which they noted that the Human Genome Project yielded $800 billion in economic impact from a $3.8 billion investment—and that a similar neuroscience initiative could expect to produce similar returns.

President Obama emphasized the impact of the genome-mapping project in his February 2013 State of the Union address and the importance of neuroscience for addressing human diseases. "Today, our scientists are mapping the human brain to unlock the answers to Alzheimer's," he said. "Now is the time to reach a level of research and development not seen since the height of the Space Race."

Sejnowski says BRAIN could ultimately help reduce the overwhelming costs for treatment and long-term care of brain-related disorders, which PricewaterhouseCoopers estimated at $515 billion for the United States alone in 2012.

"Many of the most devastating human brain disorders, such as depression and schizophrenia, only seem to emerge when large-scale assemblies of neurons are involved," says Sejnowski. "Other terrible conditions, such as blindness and paralysis, result from disruptions in circuit connections. The more precise our information about specific circuits, the more we will understand what went wrong, where it went wrong, and how to target therapies."

Computational neuroscience, a field Sejnowski helped establish, will be a central avenue of research advanced under the new Initiative. One of only ten living individuals to have been elected to three branches of the National Academies—National Academy of Sciences, National Academy of Engineering and Institute of Medicine—Sejnowski co-authored 23 Problems in Systems Neuroscience, a foundational book that lays out many of the questions BRAIN is aiming to answer.

Computational neuroscience focuses on understanding how a circuit of hundreds to thousands of brain cells, including neurons and associated cells such as astrocytes, allows us to do something as simple as reaching out a hand or as complex as processing rich visual information. The only way to fully understand systems such as olfaction or vision is to map and probe the entire circuit, which is exactly what the BRAIN Initiative proposes to do.

"We're not jumping in and mapping the entire active human brain," says Sejnowski. "But we are at a point where we can develop the tools to map entire circuits, first in invertebrates and eventually in mammals."

In fact, part of the reason that the neuroscience field is now gaining momentum is that advances in engineering and physics are allowing scientists to develop incredibly tiny tools to explore the molecular world of living cells. It is no accident, says Sejnowski, that the Science paper included a cadre of nanotechnology pioneers as coauthors. "It's like wishing for a faster car, and finding out that engineers from Bugatti and Lotus are offering to help," Sejnowski says of the cross-disciplinary collaboration.

New tools developed under BRAIN will push the cutting edge even further, enabling scientists to look at the brain with better spatial and temporal resolution, as well as analyze the millions of bits of accumulated data.

About the Salk Institute for Biological Studies:

The Salk Institute for Biological Studies is one of the world's preeminent basic research institutions, where internationally renowned faculty probe fundamental life science questions in a unique, collaborative, and creative environment. Focused both on discovery and on mentoring future generations of researchers, Salk scientists make groundbreaking contributions to our understanding of cancer, aging, Alzheimer's, diabetes and infectious diseases by studying neuroscience, genetics, cell and plant biology, and related disciplines.

Faculty achievements have been recognized with numerous honors, including Nobel Prizes and memberships in the National Academy of Sciences. Founded in 1960 by polio vaccine pioneer Jonas Salk, M.D., the Institute is an independent nonprofit organization and architectural landmark.

04/04/2013 – President Obama announces BRAIN Initiative

Mapping the Mind

President Obama announces BRAIN Initiative in which UC San Diego, 'Mesa' colleagues and private-public partners will play key roles

April 4th, 2013

By Inga Kiderra

President Barack Obama is introduced by Dr. Francis Collins, Director, National Institutes of Health, at the BRAIN Initiative event in the East Room of the White House, April 2, 2013. (Official White House Photo by Chuck Kennedy)

The President of the United States gathered together on April 2 "some of the smartest people in the country, some of the most imaginative and effective researchers in the country," he said, to hear him announce a broad and collaborative research initiative designed to revolutionize our understanding of the brain.

The BRAIN Initiative, short for Brain Research through Advancing Innovative Neurotechnologies, is launching with approximately $100 million in proposed funding in the president's Fiscal Year 2014 budget. It aims to advance the science and technologies needed to map and decipher brain activity.

Sitting in the front row for the announcement were three University of California chancellors, including UC San Diego's Pradeep K. Khosla.

Chancellor Khosla was accompanied at the White House by Ralph Greenspan, associate director of the Kavli Institute for Brain and Mind at UC San Diego (KIBM); Terry Sejnowski of the Salk Institute for Biological Studies and UC San Diego, director of the campus's Institute for Neural Computation; KIBM Director Nick Spitzer, distinguished professor of neurobiology in the Division of Biological Sciences; and Dr. Dilip V. Jeste, Estelle and Edgar Levi Chair in Aging, distinguished professor of psychiatry and neurosciences at UC San Diego School of Medicine, and director of the Stein Institute.

"As humans, we can identify galaxies light years away, we can study particles smaller than an atom," President Barack Obama said. "But we still haven't unlocked the mystery of the three pounds of matter that sits between our ears."

The human brain, Obama pointed out, has some 100 billion neurons and trillions of connections between them. Right now, he said, borrowing a musical metaphor from National Institutes of Health Director Francis Collins, we can make out just the string section of the orchestra. The BRAIN Initiative aims to make it possible to hear the entire symphony.