EEGLAB: The Gift That Keeps On Giving

When it comes to studying the brain, one of the most powerful techniques is to eavesdrop on the electric signals darting between brain cells. The first recording of brain activity from a human was reported in 1929 by German psychiatrist Hans Berger, who used electroencephalography (EEG), a non-invasive method of picking up small electrical potentials from atop the scalp. It wasn’t long before this recording method became a clinical tool to help diagnose epilepsy. Beyond the clinical setting, EEG became, and remains, one of the main methods for studying how the human brain works. That is why, in the late 1990s and early 2000s, scientists at UCSD built an open-source tool to process and analyze EEG data. EEGLAB, as it is called, continues to evolve to meet the needs of scientists around the world.

The EEGLAB project began with a desire to share resources within the EEG scientific community. In 1998, Scott Makeig attended a meeting in Carmel, where he proposed creating an open repository of EEG data. He polled the room, which was filled with EEG researchers, to see whether they would contribute their data to the repository. As Makeig puts it, “The response was tepid at best.” So he put forth another idea: open-source software based on the analyses that Makeig and others were using to make sense of their data. This time, the response was more than tepid; it was enthusiastic. Makeig recalls, “Hands shot up all around. I knew then that our software sharing effort that later became EEGLAB might have a strong future.”

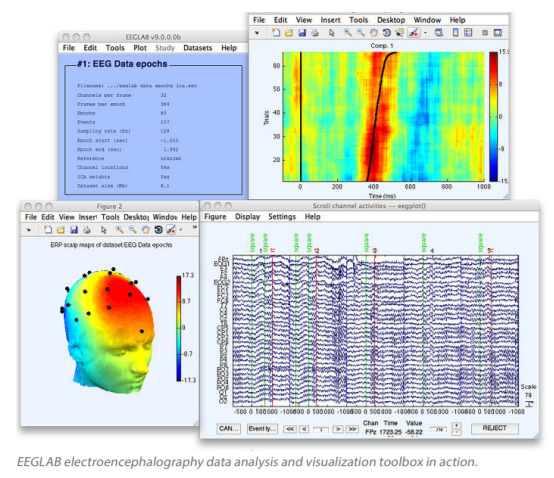

EEGLAB filled a need for data analysis that previously only very expensive, proprietary, and thus non-transparent software could provide. EEG data is notoriously difficult to analyze. The first hurdle is removing artifacts, which are signals that don’t originate from the brain. An artifact could be the twitch of a facial muscle or electrical interference from a nearby machine. This problem seems easy compared with the next one: how to separate the brain signals that mix together as they are picked up by electrodes distributed across the scalp, and how to pinpoint where each one originates.

You can think of this problem as the difficulty you might face as a parent when all of your kids are talking to you at the same time. Their voices mix together into one cacophonous audio stream that is difficult to decipher. But given the right kind of algorithm, you could separate the sound that reaches your ears so that you can listen to what each kid said individually, without interruption from the others. That is the power of a technique called ICA, or independent component analysis. It allows scientists to uncouple the fundamental brain signals contributing to an EEG recording, a key step toward localizing where those signals come from.
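To make the “talking kids” analogy concrete, here is a minimal sketch of ICA unmixing on made-up signals. It is not EEGLAB code. EEGLAB is written in MATLAB, and its default ICA routine, `runica`, implements the Infomax algorithm. For brevity, this sketch swaps in a different ICA algorithm, deflation FastICA with a `tanh` nonlinearity, written in Python with NumPy. The three source “voices,” the mixing matrix, and the helper names `whiten` and `fastica` are all hypothetical and chosen only for illustration.

```python
import numpy as np

def whiten(X):
    """Center the mixed channels, then decorrelate them and scale to unit variance."""
    X = X - X.mean(axis=1, keepdims=True)
    eigvals, eigvecs = np.linalg.eigh(np.cov(X))  # rows of X are channels
    # Rotate onto the principal axes, rescale each axis to unit variance, rotate back.
    W_white = eigvecs @ np.diag(1.0 / np.sqrt(eigvals)) @ eigvecs.T
    return W_white @ X

def fastica(X, n_iter=200, tol=1e-8, seed=0):
    """Estimate independent sources from X (channels x samples) with deflation FastICA.

    Finds one unmixing vector at a time, keeping each orthogonal to those already found.
    """
    Z = whiten(X)
    n = Z.shape[0]
    rng = np.random.default_rng(seed)
    W = np.zeros((n, n))
    for i in range(n):
        w = rng.standard_normal(n)
        w /= np.linalg.norm(w)
        for _ in range(n_iter):
            g = np.tanh(w @ Z)                      # nonlinearity applied to the projection
            g_prime = 1.0 - g ** 2                  # derivative of tanh
            w_new = (Z * g).mean(axis=1) - g_prime.mean() * w  # fixed-point update
            w_new -= W[:i].T @ (W[:i] @ w_new)      # Gram-Schmidt against earlier vectors
            w_new /= np.linalg.norm(w_new)
            converged = abs(abs(w_new @ w) - 1.0) < tol  # direction stopped changing
            w = w_new
            if converged:
                break
        W[i] = w
    return W @ Z

# Three hypothetical "kids" talking at once, modeled as simple synthetic waveforms.
t = np.linspace(0, 20, 5000)
sources = np.vstack([
    np.sin(2 * t),               # smooth voice
    np.sign(np.sin(3 * t)),      # on/off voice
    ((t * 1.5) % 1.0) - 0.5,     # sawtooth voice
])
# Each "ear" (or electrode) hears a different weighted blend of all three voices.
mixing = np.array([[1.0, 0.5, 0.3],
                   [0.4, 1.0, 0.6],
                   [0.7, 0.2, 1.0]])
mixed = mixing @ sources
recovered = fastica(mixed)

# ICA recovers sources only up to order, sign, and scale, so compare by |correlation|.
corr = np.abs(np.corrcoef(recovered, sources)[:3, 3:])
print(np.round(corr.max(axis=0), 3))
```

Each true voice should be matched by one recovered component with an absolute correlation close to 1. The order and sign of the recovered components are arbitrary, which is an inherent property of ICA. Real EEG adds complications this toy example ignores, such as many more channels, noise, and the artifacts described above.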

Artifact removal and ICA are just two of the tools that make EEGLAB so powerful. University of Minnesota researcher Scott Burwell says these tools make EEGLAB indispensable. He uses EEGLAB to test whether brain responses recorded with EEG can predict differences in cognitive-task performance between adolescents diagnosed with attention deficit hyperactivity disorder and adolescents without that diagnosis. Burwell says, “Without EEGLAB providing an easy interface to [perform] some more advanced functions... I may not even explore certain avenues of data analysis.” He adds, “It would take days, weeks, or months to produce a satisfying substitute.”

Burwell is not the only researcher indebted to EEGLAB. According to Google Scholar, the EEGLAB reference paper has 8,420 citations, and the software itself was downloaded over 19,000 times in 2017 alone, with each year yielding more downloads than the last. Currently, 4,247 researchers have opted in to the EEGLAB discussion mailing list, and 9,265 are subscribed to the EEGLAB news list to hear about updates to the code. EEGLAB documentation lives online, but learning everything the software can do is best done in person. The creators of EEGLAB, together with organizers in other countries, run workshops two to five times a year. The workshops are held throughout the world, and each one accepts forty to one hundred and fifty attendees. Last year, workshops were held in Mysore in south-central India, in Tokyo, and in Be’er Sheva, Israel. Upcoming workshops will be held in Pittsburgh on September 3rd and 4th and in San Diego on November 8-12, following the annual Society for Neuroscience meeting.

Perhaps the coolest part about an open-source program such as EEGLAB is that it never stops growing and transforming. Users can suggest changes, fix bugs, and add new functionality by contributing their own code. More than seventy-five plug-in toolboxes for EEGLAB, contributed by labs all over the world, are currently available for free through the online EEGLAB Extension Manager. The base code itself contains a whopping 125,271 lines of code, organized into 533 stand-alone functions. It is likely to keep changing as more researchers contribute code and suggestions. For instance, Burwell hopes that EEGLAB will become compatible with, and accessible from, Python, an open-source programming language that continues to incorporate more image-processing tools.

For now, though, the EEGLAB creators are focusing on another new capability for researchers who use the code. The project, funded by the NIH, will allow outside researchers to run EEGLAB scripts on the UCSD supercomputer ‘Comet’. The current trend toward collecting larger amounts of data and applying computation-heavy analyses requires many computing cores, and some researchers do not have access to them. The team hopes that access to Comet will allow labs without high-performance computing to apply the most sophisticated signal processing that EEGLAB has to offer. EEGLAB has already had a huge impact on cognitive neuroscience, and it will likely continue to do so as it evolves to meet the needs of the scientists who rely on it to study the human brain.

Margot Wohl, UCSD Neuroscience Graduate Student